If you wanted to deliver a package across the street and avoid being hit by a car, you could program a powerful computer to do it, equipped with sensors and hardware capable of running multiple differential equations to track the movement and speed of each car. But a young child would be capable of doing the same task with little effort, says Alex Demkov, professor of physics at The University of Texas at Austin.

"I guarantee you the brain is completely unaware that differential equations exist. And yet they solve the same problem of how to avoid collisions with fast approaching movement," Demkov said. "A child trains from a young age, tossing rocks to learn spatial dimensions, and can do things we can scarcely train powerful computers to do."

Not only that, the brain uses only a fraction of the energy computer chips require to accomplish the task, and can operate at room temperature.

Why is a physicist, with a background in developing new materials for advanced technologies, wondering about a child's brain? Because his research has recently led him into the world of neuromorphic computing, an emerging field that emulates the workings of the brain to perform the tasks we now use silicon-based digital processing to accomplish.

For decades, computer processing power has grown rapidly, roughly doubling every two years (a trend known as Moore's Law), and in the process has transformed our world. But in recent years, progress has slowed as chips reach the physical limits of miniaturization and require unsustainable amounts of electricity to operate.

Alternative paradigms, some new and some old, are getting a fresh look from researchers. Neuromorphic computing is one such paradigm, as is optical computing, in which light takes the place of electrons as the carrier of signals. Demkov happens to be an expert in the latter, having worked for two decades developing novel electronic materials for advanced technologies with support from the Air Force, Navy, and National Science Foundation, often in collaboration with IBM and other industry partners.

In recent years, Demkov has been developing hybrid silicon-photonic technologies based on novel nano-scale materials like silicon-integrated barium titanate, a ceramic material that exhibits exotic and useful properties for information processing.

The challenge with optical computing has been the difficulty of making components that are both controllable and small enough to use in practical devices. However, Demkov has found that barium titanate can transmit light and be switched on and off through a variety of mechanisms, using very little power and at a very small scale. Moreover, it can be fused to silicon and integrated into chips that can, for instance, provide the computing and control mechanisms for aircraft at a fraction of the weight of today's technologies.

Working with IBM and researchers at ETH-Zurich, Demkov recently demonstrated a system that is much more efficient than the current state of the art. The results were published in Nature Materials in 2018.

"Using new materials and old methods, we might be able to create new neuromorphic computers that are better than silicon-based computers at doing certain kinds of transformations," Demkov said.

Reconsidering the neuromorphic canon

The material and optical characteristics of processors are just a few aspects of the problem that need to be solved to develop a rival for silicon semiconductors, which have a century-long head start in research.

To go from a promising new material to a device that can rival and surpass silicon, quantum computers, and a host of other contenders, Demkov has pulled together a team of researchers from across UT Austin and beyond with expertise in neuroscience, algorithmic development, circuit design, parallel computing, and device architectures to create something that exceeds what leading industry groups like Google, Intel, and Hewlett Packard Enterprise are imagining.

"This is our competitive advantage," he said. "We have 30 brilliant, diverse researchers working on this problem. We have a good idea. And we are not beholden to existing technologies."

If the use of silicon digital processors is a problem at the technological end of things, troubles at a conceptual level start with the use of a mathematical formulation of thought first enunciated in the early 1950s.

Traditional neuromorphic computing is rooted in the idea that neurons spike, or react, in order to communicate. This spiking takes the place of the on/off, 0/1 of digital gates – the root of computing.

However, in the past six decades neuroscientists have learned a great deal about real neurons, which are much more complicated and interconnected than the old textbook description would lead one to believe.

"Hodgkin Huxley wrote this set of four equations based on the understanding of cell membrane and transfer circa 1954 and that's what brain science has been about ever since," Demkov said. "Now we have functional MRI, we have advanced microscopy, so we surely can develop better models."

Work by Kristen Harris, a leading neuroscientist at UT Austin who uses neuroimaging and computing to create detailed 3D models of the brain, led Demkov to the realization that the mathematical models of neuro-processing are due for a rewrite.
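
For reference, the Hodgkin-Huxley model that Demkov mentions is usually written in textbooks as the four coupled equations below. This is the standard form, not a formulation taken from the UT team's work, and the symbols are the conventional ones: $V$ is the membrane potential, $C_m$ the membrane capacitance, $I_{\text{ext}}$ an injected current, $\bar{g}$ and $E$ the maximal conductances and reversal potentials of the sodium, potassium, and leak channels, and $m$, $h$, $n$ the gating variables.

$$
\begin{aligned}
C_m \frac{dV}{dt} &= I_{\text{ext}} - \bar{g}_{\text{Na}}\, m^3 h \,(V - E_{\text{Na}}) - \bar{g}_{\text{K}}\, n^4 (V - E_{\text{K}}) - \bar{g}_{L}\,(V - E_{L}) \\
\frac{dm}{dt} &= \alpha_m(V)\,(1 - m) - \beta_m(V)\, m \\
\frac{dh}{dt} &= \alpha_h(V)\,(1 - h) - \beta_h(V)\, h \\
\frac{dn}{dt} &= \alpha_n(V)\,(1 - n) - \beta_n(V)\, n
\end{aligned}
$$

The first equation balances the currents across the cell membrane; the other three describe how the ion channels open and close as the voltage changes. A spike is what the model produces when these four quantities feed back on one another.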

"Brains are not one bit machines," explained Harris. "Just based on the structure of synapses in the brain in different brain regions and under different activity levels, we've seen 26 different distinct synaptic types. It's not an on off machine. It has graded levels of capability. And is not digital; it's analog."

"The simple explanation needs to be rethought and rearticulated as an algorithm," Demkov concurred.

Harris was drawn to the project because of the diverse perspectives of the researchers involved. "They're all thinking outside the box. But they didn't realize how complicated the box was," she said. "We may not even speak the same language when we start out, but the goal eventually will be to at least understand each other's language and then begin to build bridges."

Separate from the behavior and logic of neurons themselves, a key part of the project is the development of improved neural networks — a method of training computers to "learn" how to do human-like tasks, from identifying images to discovering new scientific theories.

Neural networks, when combined with large amounts of data, have shown themselves to be incredibly effective at solving problems that traditional simulation and modeling are incapable of handling. However, today's state-of-the-art methods are still slow, require massive amounts of computer power, and have been limited in their applications and robustness.

Demkov and the UT team are eyeing new formulations of neural networks that may be able to work faster using non-linear, random connectivity.

"This particular kind of neuromorphic architecture is called the reservoir computer or an echo state machine," he explained. "It turns out that there's a way to realize this type of system in optics which is very, very neat. With neuromorphic computing, you didn't compute anything. You just train the neural network to use spiking to say yes or no."

Leading the device design effort on the project is Ray Chen, a chair in the Electrical and Computer Engineering department and director of the Nanophotonics and Optical Interconnects Research Lab at the Microelectronics Research Center.

As part of an Air Force-funded research project, Chen has been experimenting with optical neural networks that could consume orders of magnitude less power than current CPUs and GPUs. At the Asia and South Pacific Design Automation Conference 2019, Chen presented a software-hardware co-designed slim optical neural network that demonstrates 15 to 38 percent less phase shift variation than state-of-the-art systems, with no accuracy loss and better noise robustness. Recently he proposed an optical neural network architecture for performing fast Fourier transforms, a type of computation frequently used in engineering and science; the design could potentially be three times smaller than previous designs with negligible accuracy degradation.

"A UT neuromorphic computing center would provide the vertical integration of different technology readiness level from basic science to system applications that can significantly upgrade the human and machine interface," Chen said.

The researchers on the team have a secret weapon at their disposal: the supercomputers at UT's Texas Advanced Computing Center, including Frontera and Stampede2, the #1 and #2 most powerful supercomputers at any U.S. university.

"These systems allow us to predict the characteristics of materials, circuits, and devices before we construct them, and help us come up with the optimal designs for systems that can do things we've never done before," he said.

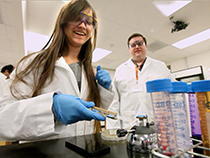

Down in the Material Physics Lab, which Demkov co-leads, he shows off a machine, the only one of its kind in the world, made of steel cylinders and thick electrical wires and capable of creating ultra-pure silicon photonic materials. There he builds and tests nano-scale components invisible to the naked eye that could one day be ubiquitous, allowing computation to further embed itself in our day-to-day lives.

Despite competition from the world's most powerful technology companies, Demkov believes UT has the resources and expertise to become the leader in neuromorphic computing.

"Most of the people in industry are working on this problem in a vacuum and are trying to gather ideas from their limited understanding of the literature," he said. "We have the engine which drives the literature in our midst, and together we can create something truly extraordinary."
