A cross-disciplinary team including University of Texas at Austin statisticians Giorgio Paulon and Abhra Sarkar has received the Mitchell Prize, a top prize in the field, for its study modeling what happens in the brains of English speakers learning the tonal differences of another language.

In Mandarin Chinese, for example, there are four ways to pronounce "ma," and each one has a totally different meaning. Say it with a certain tone and it means "mother." But beware — say it slightly differently and it means "horse."

These tonal differences are rife in Mandarin but nonexistent in languages such as English. For some nonnative speakers, tonal differences make Mandarin especially difficult to master. They also make it an ideal case study for understanding how the human brain rewires itself to learn new languages — which is what Sarkar, Paulon and their colleagues set out to discover.

"This is an ambitious goal, but this could help eventually develop precision learning strategies for different people depending on how their individual brains work," Sarkar said.

This is the second Mitchell Prize since 2018 for Sarkar, an assistant professor in UT Austin's Department of Statistics and Data Sciences.

The statistical method the team developed could also have applications in other areas of neuroscience research or in clinical practice. The prize is awarded annually in recognition of an outstanding paper that describes how a Bayesian analysis has solved an important applied problem. The award is sponsored by the American Statistical Association, the International Society for Bayesian Analysis and the Mitchell Prize Founders' Committee. Joined by Fernando Llanos and Bharath Chandrasekaran, Sarkar and Paulon were selected for their 2020 paper, published in the Journal of the American Statistical Association in September.

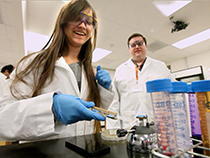

In their experiment, 20 adult English speakers were taught how to differentiate four different tonal variations that give syllables in Mandarin different meanings. Over the course of several days, participants listened to native speakers saying different versions of syllables and responded with which of the four tones they heard. They were told immediately whether they got a response right. As participants learned to differentiate the tones, they responded more quickly and more accurately. In other words, they began to learn one of the basic elements of Mandarin.

The statisticians developed a new way to model how the brain learns language over time and tested it on the data collected from the experiment. Traditional approaches are best suited to binary decisions in static situations. The new approach, however, is able to model the four-choice decisions associated with learning Mandarin tones and capture the biological changes that occur in the brain over time during learning.

"The outstanding contribution of this work was to eliminate these limitations by overcoming daunting methodological and computational challenges, thereby advancing the statistical capabilities many significant steps forward through the development of a novel Bayesian model for multi-alternative decision-making in dynamic longitudinal settings," Sarkar wrote in a summary for funders.

Using this new method, the statistics researchers arrived at two notable findings.

First, tones 1 (high level) and 3 (low dipping) were the easiest to learn to tell apart. That's because tone 3 is unique to Mandarin, and there is no equivalent among English phonemes. That insight could lead to better learning strategies.

"For example, poor learners may benefit from beginning with easy tones like T1 and T3 and making the training more challenging afterward with the introduction of tones T2 and T4," said Paulon, a graduate student in Sarkar's group.

To understand the second finding, it helps to understand the basics of how our brains learn to put things into categories.

"Learning to make categorization decisions is particularly crucial in our interactions with our environments," Sarkar said. "We have to decide whether a person is a friend or a foe, a plant is edible or nonedible, a word is 'bat' or 'hat.'"

When deciding what category something fits in, our brains accumulate sensory evidence by increasing the firing rates of neurons in certain areas. When neuronal activity crosses a particular evidence threshold, we have enough information to make a decision. As we learn something new, like a language, the rate of rising neuronal activity and the thresholds involved in those decisions change. Our brains become more experienced.

The second finding is this: When nonnative speakers who are especially good at learning Mandarin process audio information to decide which of the four tones they heard, their brains do it more quickly than the brains of those who don't learn the language as easily. That might seem obvious, but until this experiment, it was also possible that good learners simply needed less information to decide accurately. In terms of the way groups of neurons arrive at a decision, being a better learner might depend on increasing neuronal activity faster, on lowering the evidence threshold, or on both.

The researchers found that good and bad learners need just as much audio information to tell the four tones apart (the threshold stays the same); the good ones just process audio information faster.
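The difference between faster evidence accumulation and a lower threshold can be illustrated with a toy simulation. The sketch below is not the team's Bayesian model; it is a simplified "race" among four accumulators, one per tone, and every name and number in it (`simulate_trial`, `summarize`, the drift rates, the thresholds) is a hypothetical choice made for illustration only.

```python
import random


def simulate_trial(rng, drift_correct, threshold,
                   drift_other=0.1, noise=1.0, n_tones=4, max_steps=100_000):
    """Run one four-choice trial; return (chosen_index, steps_taken).

    Index 0 stands for the tone that was actually played. Each tone has
    its own accumulator that gathers noisy evidence at every time step.
    """
    drifts = [drift_correct] + [drift_other] * (n_tones - 1)
    evidence = [0.0] * n_tones
    leader = 0
    for step in range(1, max_steps + 1):
        for i in range(n_tones):
            evidence[i] += drifts[i] + noise * rng.gauss(0.0, 1.0)
        leader = max(range(n_tones), key=lambda i: evidence[i])
        # The first accumulator to reach the threshold determines the response.
        if evidence[leader] >= threshold:
            return leader, step
    return leader, max_steps  # safety cap; not expected with positive drifts


def summarize(drift_correct, threshold, n_trials=1000, seed=0):
    """Return (accuracy, mean_steps) over many simulated trials."""
    rng = random.Random(seed)
    n_correct = 0
    total_steps = 0
    for _ in range(n_trials):
        choice, steps = simulate_trial(rng, drift_correct, threshold)
        n_correct += (choice == 0)
        total_steps += steps
    return n_correct / n_trials, total_steps / n_trials


if __name__ == "__main__":
    # Hypothetical settings: same threshold, different accumulation rates,
    # plus a lower-threshold variant for comparison.
    scenarios = [
        ("slower accumulation", 0.3, 20.0),
        ("faster accumulation", 0.6, 20.0),
        ("lower threshold", 0.3, 10.0),
    ]
    for label, drift, threshold in scenarios:
        accuracy, mean_steps = summarize(drift, threshold)
        print(f"{label}: accuracy={accuracy:.2f}, mean steps={mean_steps:.1f}")
```

In this toy setup, raising the accumulation rate while holding the threshold fixed makes responses both quicker and more accurate, whereas lowering the threshold makes them quicker at the cost of accuracy. That contrast is the kind of distinction the researchers' model was built to separate in real data.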

When the research began in 2018, Chandrasekaran was an associate professor at UT Austin, and Llanos was a postdoctoral researcher in his lab. Chandrasekaran and Llanos later moved to the University of Pittsburgh. Last year, Llanos returned to UT Austin as an assistant professor in the Department of Linguistics.

Sarkar credited Llanos for introducing him "to many new exciting scientific problems and ideas, including the previous literature on this problem, and also for collaborating very intensely on this project to make sure the statistical modeling accurately represents the underlying neuroscience aspects of the problem."

Beyond helping people learn new languages, Sarkar said their statistical model might assist clinicians in understanding why an individual has a speech or hearing disorder. It could also help neuroscientists studying other kinds of decision making, such as how we make sense of the visual world.
