Imagine that you are a robot in a hospital: composed of bolts and bits, running on code, and surrounded by humans. It's your first day on the job, and your task is to help your new human teammates, the hospital's employees, do their jobs more effectively and efficiently. Mainly, you're fetching things. You've never met the employees before and don't know how they handle their tasks. How do you know when to ask for instructions? At what point does asking too many questions become disruptive?

This is the core question that a team of researchers at Texas Computer Science (TXCS) has been trying to answer. In a paper entitled "A Penny for Your Thoughts: The Value of Communication in Ad Hoc Teamwork," Reuth Mirsky, William Macke, Andy Wang, Harel Yedidsion, and Peter Stone "investigate how to design artificial agents that can communicate in order to be valuable members in a team."

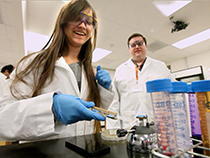

In "A Penny for Your Thoughts," the research team used a service robot example to highlight a situation in which an artificial agent must learn to work with people without prior knowledge of the collaborative environment. These service robots, which mainly retrieve supplies for physicians or nurses, must balance two goals: understanding the task-specific goals of their human teammates, and understanding when it is appropriate to ask questions rather than act autonomously. As Reuth Mirsky explains, "we as people are very good at that most of the time." In part, this is due to the human ability to reason about other people's motives through facial expressions, body language, spoken language, and a plethora of other explicit and implicit cues that communicate intent. "We have it hardwired in our brains" that others have goals and act to accomplish them, so in collaborative environments it's relatively easy to intuit how to help others or when to ask them questions. This sort of logic is something that "you have to write down to the machine." The subtle nuances of human interaction that let people get along have to be observed, analyzed, and translated into a logical framework that a machine can understand.

To help machines decide when to ask a question and when to act on their own, the team made the "assumption that asking something has a specific cost." To keep those costs down, the team formally defined the situations in which it is not appropriate to ask at all. If a robot follows the same routine of checking inventory at a set time, asking about the task every time would not help, because there is no ambiguity about what the robot should do: it already knows the procedure, and the procedure does not change. On the other hand, when the robot is given a task that differs significantly from its standard routine, or is given a new goal, asking becomes important. "We are only interested in the window in the middle, when the robot needs to ask something that is not clear and if it won't ask, then it might take a wrong action," said Mirsky. Ultimately, the team framed the problem like this: "In order to be useful collaborators, the robot's main goal is to assist." From this main goal, the robot learns to choose its own goal, which may be fetching an item in a certain way or assisting in a different manner. "So if it understands the goals of its teammates, it can be a good helper. The queries are about the goals of the other agents. So, 'Are you trying to do this, or are you trying to do that?' According to the answer [it] gets, [it'll] be able to act accordingly."
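To make the cost trade-off concrete, here is a minimal sketch of one way an agent could decide whether a question is worth asking. It is not the formulation from the paper; it is a simplified illustration that assumes the robot keeps a probability for each goal its teammate might be pursuing, that asking has a fixed cost, and that acting on the wrong goal has a fixed penalty. The function names `should_ask` and `best_guess`, the goal labels, and the cost values are all hypothetical.

```python
def should_ask(goal_probs: dict[str, float], query_cost: float,
               wrong_action_cost: float) -> bool:
    """Return True if asking is cheaper, in expectation, than guessing.

    Hypothetical rule for illustration only: without asking, the robot acts
    on the most likely goal, so it is wrong with probability 1 - max(p).
    """
    p_most_likely = max(goal_probs.values())
    expected_cost_of_guessing = (1.0 - p_most_likely) * wrong_action_cost
    return expected_cost_of_guessing > query_cost


def best_guess(goal_probs: dict[str, float]) -> str:
    """The goal the robot would act on if it does not ask."""
    return max(goal_probs, key=goal_probs.get)


# Routine task: no ambiguity, so a question could only add cost.
routine = {"check_inventory": 1.0}
# New or unusual request: two goals are equally plausible.
ambiguous = {"fetch_gauze": 0.5, "fetch_syringes": 0.5}

for name, beliefs in [("routine", routine), ("ambiguous", ambiguous)]:
    if should_ask(beliefs, query_cost=1.0, wrong_action_cost=10.0):
        print(f"{name}: ask the teammate which goal they have")
    else:
        print(f"{name}: act on {best_guess(beliefs)}")
```

With these illustrative numbers, the routine task never triggers a question, while an even split between two goals does, which loosely mirrors the "window in the middle" Mirsky describes.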

Mirsky, who completed her Ph.D. at Ben-Gurion University and had a strongly theoretical research background in AI, wanted to work on research with practical applications in the real world. She notes that with recent advances in AI and robotics, more work is being done on designing "human-aware AI," and that researchers need to design these types of "algorithms and to design machines that are able to collaborate." This desire led her to TXCS professor Peter Stone's AI Lab. "When I got to Peter's lab, because he's doing a lot of research in these areas and he cares a lot about doing something practical and something with use in the real world," she was inspired "to take some of my more theoretical research and apply it to a specific problem, a real problem."

The research team continues to study multi-agent systems and plans to build on the insights from this initial publication in future work. "A Penny for Your Thoughts: The Value of Communication in Ad Hoc Teamwork" will be presented at the International Joint Conference on Artificial Intelligence (IJCAI).

This article has been cross-posted from the Department of Computer Science website.
