Textbook descriptions of brain cells make neurons look simple: a long spine-like central axon with branching dendrites. Taken individually, these might be easy to identify and map, but in an actual brain, they're more like a knotty pile of octopi, with hundreds of limbs intertwined. This makes understanding how they behave and interact a major challenge for neuroscientists.

One way that researchers untangle our neural jumble is through microscopic imaging. By taking photographs of very thin layers of a brain and reconstructing them in three-dimensional form, it is possible to determine where the structures are and how they relate.

But this brings its own challenges. Getting high-resolution images, and capturing them quickly in order to cover a reasonable section of the brain, is a major task.

Part of the problem lies in the trade-offs and compromises that any photographer is familiar with. Leave the shutter open long enough to let in lots of light and any motion will cause a blur; take a quick image to avoid blur and the subject may turn out dark.

But other problems are specific to the methods used in brain reconstruction. For one, high-resolution brain imaging takes a tremendously long time. For another, the imaging itself can harm the sample. In the widely used technique called serial block face electron microscopy, a piece of tissue is cut into a block, the surface is imaged, a thin section is cut away and the block is then imaged again; the process is repeated until completion. However, the electron beam that creates the microscopic images can actually cause the sample to melt, distorting the subject it is trying to capture.

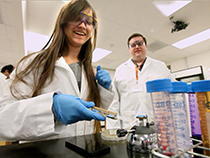

Uri Manor, director of the Waitt Advanced Biophotonics Core Facility at the Salk Institute for Biological Studies in San Diego, is responsible for running numerous high-powered microscopes used by researchers across the nation. He is also tasked with identifying and deploying new microscopes and developing solutions to problems that today's technologies struggle with.

"If someone comes with a problem and our instruments can't do it, or we can't find one that can, it's my job to develop that capability," Manor said.

Aware of the imaging issues facing neuroscientists, he decided a new approach was necessary. If he had reached the physical limits of microscopy, Manor reasoned, maybe better software and algorithms could provide a solution.

Manor and Linjing Fang, an image analysis specialist at Salk, believed that deep learning (a form of machine learning that uses multiple layers of analysis to progressively extract higher-level features from raw input) could be very useful for increasing the resolution of microscope images, a process called super-resolution.

MRIs, satellite imagery, and photographs had all served as test cases for developing deep learning-based super-resolution approaches, but remarkably little had been done in microscopy.

The first step in training a deep learning system involves finding a large corpus of data. For this, Manor teamed up with Kristen Harris, a neuroscience professor at The University of Texas at Austin and one of the leading experts in brain microscopy.

"Her protocols are used around the world. She was doing open science before it was cool," Manor said. "She gets incredibly detailed images and has been collaborating with Salk for a number of years."

Harris offered Manor as much data as he needed for training. Then, using the Maverick supercomputer at the Texas Advanced Computing Center (TACC) and several days of continuous computation, he created low-resolution analogs of the high-resolution microscope images and trained a deep learning network on those image pairs.
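The idea of building training pairs from existing high-resolution images can be sketched in a few lines. This is only an illustration, not the team's actual pipeline: the function names (`make_low_res`, `make_training_pairs`) are hypothetical, simple block averaging stands in for whatever degradation the researchers used, and the factor of 4 per axis is an assumption chosen so that each low-resolution image has 16 times fewer pixels.

```python
import numpy as np


def make_low_res(high_res: np.ndarray, factor: int = 4) -> np.ndarray:
    """Return a low-resolution analog of a 2D image by block averaging.

    Assumption: averaging factor x factor pixel blocks is a stand-in for
    the real degradation process, which the article does not describe.
    """
    h, w = high_res.shape
    # Crop so both dimensions divide evenly by the factor.
    h_crop, w_crop = h - h % factor, w - w % factor
    cropped = high_res[:h_crop, :w_crop].astype(np.float64)
    # Split into (factor x factor) blocks and average each block.
    blocks = cropped.reshape(h_crop // factor, factor, w_crop // factor, factor)
    return blocks.mean(axis=(1, 3))


def make_training_pairs(images, factor: int = 4):
    """Build (low_res_input, high_res_target) pairs for supervised training."""
    pairs = []
    for img in images:
        h, w = img.shape
        # Crop the target the same way so input and target stay aligned.
        target = img[: h - h % factor, : w - w % factor]
        pairs.append((make_low_res(img, factor), target))
    return pairs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-ins for high-resolution micrographs.
    fake_images = [rng.random((64, 64)) for _ in range(3)]
    for low, high in make_training_pairs(fake_images):
        print(low.shape, high.shape, high.size // low.size)
```

A network trained on such pairs learns to map the degraded input back to the detailed target, which is why a plentiful supply of high-quality images matters so much.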

Success meant that samples could be imaged without risking damage, and that they could be obtained at least 16 times as fast as traditionally done.

"To image the entire brain at full resolution could take over a hundred years," Manor explained. "With a 16 times increase in throughput, it perhaps becomes 10 years, which is much more practical."

The team published their results on bioRxiv, presented them at the F8 Facebook Developer Conference and the 2nd NSF NeuroNex 3DEM Workshop, and made the code available through GitHub.

"Not only does this approach work, but our training model can be used right away," Manor said. "It's extremely fast and easy. And anyone who wants to use this tool will soon be able to log into 3DEM.org [a web-based research platform focused on developing and disseminating new technologies for enhanced resolution 3-dimensional electron microscopy, supported by the National Science Foundation] and run their data through it."

"Uri really fosters this idea of image improvement through deep learning," Harris said. "Right now, many of the images have this problem, so there's going to be places where you want to fill in the holes based on what's present in the adjacent sections."

With a proof of concept in place, Manor and his team have developed a tool that will enable advances throughout neuroscience. But without fortuitous collaborations with Kristen Harris, Howard and Monroe, and TACC, it might never have come to fruition.

"It's a beautiful example of how to really make advances in science. You need to have experts open to working together with people from wherever in the world they may be to make something happen," Manor said. "I just feel so very lucky to have been in a position where I could interface with all of these world-class teammates."

This article is adapted as an excerpt from a longer story posted on the website of the Texas Advanced Computing Center.
