In 2020, a deep learning generative model designed to turn low-resolution images into high-quality photos was posted online. When a user uploaded a low-resolution image of President Obama, though, it returned an image that is now referred to as 'White Obama.' The Face Depixelizer model, based on an algorithm called PULSE, was reconstructing images with predominantly white features, setting off a heated debate across social media.

Critics suspected biases in the data used to train the model. But a team of researchers at UT Austin found that the problem was actually baked into the algorithm itself, as some news outlets have reported: the algorithm tended to amplify even a small bias in the training data.

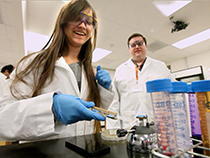

"It can be very discriminatory," said Adam Klivans, professor of computer science, director of the Institute for Foundations of Machine Learning (IFML) and director of the Machine Learning Laboratory.

The team, led by Alex Dimakis, professor of electrical and computer engineering and co-director of IFML, then demonstrated how to correct this tendency. Klivans said the same insights could help improve AI systems designed to remove noise from medical images.

Klivans and Dimakis were interviewed ahead of a public lecture about the work, held on the UT Austin campus this month by the Machine Learning Laboratory. They and their research were featured on local Austin TV station KXAN and on WWLP in Massachusetts, in a segment titled "UT Austin researchers tackle AI that produced 'White Obama' image."
