One thing that sets humans apart from even the smartest of artificially intelligent machines is the ability to understand, not just the definitions of words and phrases, but the deepest meanings embedded in human language.

Alex Huth, a neuroscientist and computer scientist, is trying to build an intelligent computer system that can predict the patterns of brain activity in a human listening to someone speaking. If a computer could begin to extract the same kinds of meaning from a set of words as a human does, that might help explain how the human brain itself makes sense of language – and even pave the way for a speech aid for people who can't speak.

Experience an interactive 3D map of the human brain showing which areas respond to hearing different words.

Music for today's show was produced by:

Podington Bear - https://www.podingtonbear.com/

Have you heard the news? Now you can listen to Point of Discovery on Spotify. Hooray!

MA: This is Point of Discovery. Let me say a word, for example, "dog." When I say "dog," there's a little rush of blood to several tiny spots in your brain that specifically respond to that word. Hear another word, this time "umbrella," and there's a little rush of blood to some totally different spots in your brain. Now get this: the dog spots and umbrella spots in your brain are in the same places as they are in my brain.

MA: How do we know that? In a series of studies, scientists have been putting people into MRI scanners—machines that can measure blood flow in the brain from moment to moment—and playing podcasts for them.

MA: As the people in the MRI scanners listened to different words and sentences, the scientists could see a wide range of brain areas light up in response to the stories. These studies have revealed some really interesting things about the brain and language. First:

AH: A huge amount of the brain is involved in processing language in some way. Basically, it's easier to say the brain areas that are not involved in language processing than it is to say the brain areas that are involved in language processing. That's kind of bizarre and people had not really seen this before …

MA: That's Alex Huth. He was a postdoctoral researcher who worked on one of those studies and is now a professor of neuroscience and computer science at the University of Texas at Austin.

AH: And all these areas aren't dedicated just to processing language; it's that language is hooking into other cognitive processes.

MA: A second insight is that there are regions of the brain that specialize in categories of words, such as numbers, places, people or time. Here's the weird thing—each word can trigger activity in more than one of these category regions. For example, when you hear the word "top," a little spot lights up within the brain region specializing in clothing and appearances, but so does a little spot in a brain region that has to do with numbers and measurements.

MA: These insights are interesting, but they still don't represent a complete picture of how our brains process language. It's a bit like knowing the ingredient list for eggplant parmesan but not having the actual recipe that tells you how the ingredients come together to make the dish.

AH: When words come together in a sentence or in a narrative, they mean much more than the single words do on their own. And how we combine words to get meaning from speech as a whole, instead of just from individual words, is, I think, the big unsolved problem in both the neuroscience of language and in computer science and natural language processing. Both fields are kind of struggling with the same question: how do you get at the meaning of sentences or narratives?

MA: Huth and his team are now trying to build an intelligent computer system that can predict the patterns of brain activity in a human listening to language, such as the podcasts played in those studies. If a computer could begin to extract the same kinds of meaning from a set of words as a human does, that might help explain how the human brain itself makes sense of language, and even pave the way for a speech aid for people who currently have no such options.

AH: We want to understand how the brain does this thing that is language, which is, I think, one of the most remarkable things that human brains do. But there are a lot of practical implications. Many people have brain diseases or neurological diseases that have caused them to lose the ability to speak or to communicate. I think it would be very valuable if we could use what we learn about how the brain actually processes the meaning of language to build brain-computer interfaces that would allow them to communicate.

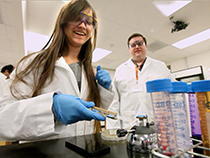

MA: But there's a catch: to train an artificially intelligent system, the researchers need hundreds of hours of brain activity data collected from people lying in MRI scanners listening to speech. And lying in an MRI scanner has traditionally not been very comfortable. Huth and his graduate student Shailee Jain are actually subjects in their own experiment.

SJ: It's an okay experience if you remember that you're doing it for the science, but otherwise, it's a little uncomfortable because it gets claustrophobic, and even the smallest movement in the MRI scanner is disastrous for our data.

MA: Now, thanks to Huth, lying perfectly still in an MRI scanner has gotten a whole lot easier.

SJ: The cool thing we have to prevent motion is something that Alex came up with, with a bunch of his friends in grad school. It's called a head case, and I think they have a really fancy company that manufactures those head cases.

MA: Oh yeah, I see it up on the shelf. It looks like a Spartan's helmet or something. It looks like it's made out of foam?

AH: The way it works is we actually take a 3D scan of each person's head using a little handheld scanner, and then we manufacture one of these foam helmets for each person. It is precisely carved on the inside to match the shape of that person's head and precisely carved on the outside to match the MRI scanner. So you put this helmet on, and it's fitted perfectly to you, so you can't move within the helmet. Then, once you lie down in the scanner, it kind of clamps onto you, so you are interfaced perfectly to the scanner and you basically can't move.

MA: A typical study in neuroscience might involve subjects spending an hour or two in an MRI scanner. Like astronauts trying to break a record for the most days in space, Huth and Jain hope to clock hundreds of hours in the scanner over a couple of years. With that amount of brain activity data, they aim to give their artificially intelligent system enough experience to understand human language.

MA: At the heart of their system is a neural network, which is sort of like an artificial brain. At first, it's like a bunch of neurons whose connections are random, so they don't yet know how to work together. But over time, using machine learning, the researchers train the neural network to recognize how strings of words relate to patterns of brain activity in a human listener. Just like a baby's brain changes over time as it experiences people talking, the neural network slowly adjusts the strengths of the connections between its neurons, forming circuits that can process language information. Eventually, both human and machine are able to understand language. A key difference, though, is that the machine version allows scientists to experiment in entirely new ways.

AH: With a neural network we can actually look inside it and see what it's doing, right? We can do it to some extent with the human brain, but we can't, you know, arbitrarily break parts and see what effect that has. We can't observe all of the activity all the time like we can with a neural network. So just having the brain process translated into this artificial neural network gives us a lot more leverage to poke around and figure out how things work.

MA: Huth and Jain still have a lot of work ahead collecting MRI data and building their system, but they've already built a natural language computer model that falls somewhere between the simple one we talked about at the beginning of the show, which treats each word individually, and one that understands language more like a human does. Jain explains how the current version is a step toward that more complex system.

SJ: Given all the words you've heard until now, it predicts the word that's going to come next. For example, "switch off your [blank]": there is a good chance you're gonna say mobile or cell phone or something like that, right? So this blank is really easy to fill, because you know what is likely to come next. The language model learns to predict that blank, and to fill that blank correctly, you need to have understood everything that came earlier. For example, nobody would say "switch off your cat"; that doesn't make sense.

MA: This new contextual model is much better at predicting human brain activity than the simpler model that looks at words in isolation. Like the simpler model, it shows that some brain areas care only about individual words …

AH: They care about what the sounds of the word are, or what the words are; they don't care about the context. But there are a lot of parts of the brain that only respond when you hear the whole story, or paragraphs in context.

MA: In fact, Huth and his research team have found many areas of the brain that will engage only when deeper meanings emerge. By paying attention to strings of words, rather than just individual words, their natural language model is getting closer to doing what only humans could ever do—truly understanding what another human is saying.

MA: Point of Discovery is a production of the University of Texas at Austin's College of Natural Sciences. Like our show? Then please review it on iTunes or wherever you get your podcasts. And now, that includes Spotify. To experience an interactive 3D map of the human brain showing which areas respond to hearing different words, stop by our website at pointofdiscovery.org. While you're there, check out photos of the head case that has made it much easier to hang out in an MRI scanner. You'll also find notes about the music you heard in this episode. Our senior producer is Christine Sinatra. I'm your host and producer Marc Airhart. Thanks for listening!

About Point of Discovery

Point of Discovery is a production of the University of Texas at Austin's College of Natural Sciences. You can listen via iTunes, Spotify, RSS, Stitcher or Google Play Music. Questions or comments about this episode or our series in general? Email Marc Airhart.
